Introduction

In artificial intelligence and data science, deep learning has changed the way machines learn from data and make decisions. At its core are neural networks, specifically deep neural networks, which now underpin many sophisticated applications. In this blog post, we will explore how neural networks work and survey the main architectures that drive deep learning.

Understanding Deep Learning

Deep learning is a subset of machine learning that uses artificial neural networks, loosely inspired by the way the human brain processes information, to learn from data. At its essence, deep learning involves training neural networks on large amounts of data so that they learn to recognize patterns and make predictions or decisions without being explicitly programmed with hand-written rules.

Neural Networks in a Nutshell

Neural networks are computational models loosely inspired by the structure and functioning of the human brain.

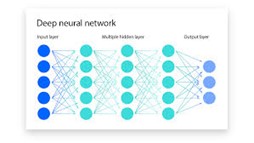

They consist of interconnected nodes, or artificial neurons, organized in layers. These layers include the input layer, hidden layers, and the output layer. Each connection between neurons is associated with a weight, and the network learns by adjusting these weights during the training process.

Deep Neural Networks (DNNs)

Deep neural networks extend this idea by stacking multiple hidden layers. This depth lets them learn hierarchical representations of data, with earlier layers capturing simple features and later layers combining them into more intricate patterns. As a data scientist, working with deep neural networks enables you to tackle complex problems such as image and speech recognition and natural language processing.
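Stacking layers amounts to applying the same weighted-sum-plus-activation step repeatedly, feeding each layer's output into the next. The following is a minimal plain-Python sketch, assuming ReLU activations in the hidden layers and a linear output (common choices, not prescribed by the text); the layer sizes and weights are arbitrary placeholders, not a trained model.

```python
def dense_layer(inputs, weights, biases, activation):
    """Fully connected layer: each row of `weights` holds one neuron's weights."""
    return [
        activation(sum(x * w for x, w in zip(inputs, row)) + b)
        for row, b in zip(weights, biases)
    ]

def relu(z):
    return max(0.0, z)

def identity(z):
    return z

def forward(x, layers):
    """Pass `x` through each (weights, biases, activation) layer in order."""
    for weights, biases, activation in layers:
        x = dense_layer(x, weights, biases, activation)
    return x

# A tiny "deep" network: 2 inputs -> 3 hidden -> 2 hidden -> 1 output.
# The weights are arbitrary placeholders, not trained values.
layers = [
    ([[0.2, -0.5], [0.8, 0.1], [-0.3, 0.7]], [0.0, 0.1, -0.1], relu),
    ([[0.5, -0.4, 0.3], [0.1, 0.9, -0.6]], [0.05, 0.0], relu),
    ([[1.0, -1.0]], [0.0], identity),
]
print(forward([1.0, 2.0], layers))  # a one-element list, approximately [-0.39]
```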

Artificial Neural Networks (ANNs)

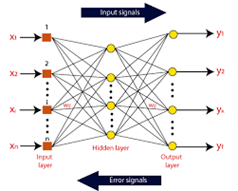

Artificial neural networks, a term often used interchangeably with neural networks, are the foundation of deep learning. They consist of layers of interconnected nodes, each performing a simple computation. Input data is fed into the network, and predictions are generated through forward propagation. During training, backpropagation computes how much each weight contributed to the error (the gradient of the loss with respect to that weight), and an optimization algorithm such as gradient descent uses these gradients to adjust the weights so that the difference between predicted and actual outcomes shrinks.
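Forward propagation, backpropagation, and the weight update can be seen together in a tiny network written out by hand. This is a sketch under stated assumptions: one hidden layer of two tanh units, a linear output, a squared-error loss, plain per-example gradient descent, and a made-up toy dataset. Real frameworks compute the same gradients automatically.

```python
import math
import random

def forward(x, params):
    """Forward propagation: input -> tanh hidden layer -> linear output."""
    W1, b1, w2, b2 = params
    z1 = [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(W1, b1)]
    h = [math.tanh(z) for z in z1]
    y_hat = sum(wj * hj for wj, hj in zip(w2, h)) + b2
    return y_hat, h

def loss_and_grads(x, y, params):
    """Squared-error loss and its gradients via the chain rule (backpropagation)."""
    W1, b1, w2, b2 = params
    y_hat, h = forward(x, params)
    loss = 0.5 * (y_hat - y) ** 2
    d_yhat = y_hat - y                    # dL/dy_hat
    d_w2 = [d_yhat * hj for hj in h]      # dL/dw2
    d_b2 = d_yhat                         # dL/db2
    # Propagate back through the output weights and the tanh nonlinearity.
    d_z1 = [d_yhat * wj * (1.0 - hj ** 2) for wj, hj in zip(w2, h)]
    d_W1 = [[dz * xi for xi in x] for dz in d_z1]
    d_b1 = d_z1
    return loss, (d_W1, d_b1, d_w2, d_b2)

def sgd_step(params, grads, lr):
    """Gradient descent: move each parameter a small step against its gradient."""
    W1, b1, w2, b2 = params
    dW1, db1, dw2, db2 = grads
    W1 = [[w - lr * g for w, g in zip(row, grow)] for row, grow in zip(W1, dW1)]
    b1 = [b - lr * g for b, g in zip(b1, db1)]
    w2 = [w - lr * g for w, g in zip(w2, dw2)]
    return (W1, b1, w2, b2 - lr * db2)

def mean_loss(data, params):
    return sum(loss_and_grads(x, y, params)[0] for x, y in data) / len(data)

# Toy dataset (made up for illustration): the target is x0 - x1.
data = [([0.0, 0.0], 0.0), ([1.0, 0.0], 1.0), ([0.0, 1.0], -1.0), ([1.0, 1.0], 0.0)]
rng = random.Random(0)
params = ([[rng.uniform(-0.5, 0.5) for _ in range(2)] for _ in range(2)],
          [0.0, 0.0],
          [rng.uniform(-0.5, 0.5) for _ in range(2)],
          0.0)

before = mean_loss(data, params)
for epoch in range(200):
    for x, y in data:
        _, grads = loss_and_grads(x, y, params)
        params = sgd_step(params, grads, lr=0.1)
after = mean_loss(data, params)
print(f"mean loss before: {before:.4f}, after: {after:.4f}")
```

Comparing backpropagated gradients against finite differences is a common way to catch mistakes in a hand-written backward pass.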

Deep Learning Architectures

Several architectures have emerged within the deep learning landscape, each tailored for specific tasks and domains. Some notable architectures include:

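Convolutional Neural Networks (CNNs): Well suited to image recognition, CNNs use convolutional layers that slide small learned filters across an image to detect local patterns such as edges; stacking these layers builds a spatial hierarchy of increasingly complex features.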

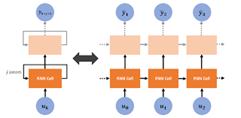
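The sliding-filter idea can be sketched in a few lines of plain Python. This assumes a single-channel image, a stride of 1, and no padding. Like most deep learning libraries, it actually computes cross-correlation (the kernel is not flipped), which is what CNN layers conventionally call "convolution". The kernel here is hand-picked for illustration, whereas a CNN learns its kernel values during training.

```python
def conv2d(image, kernel):
    """'Valid' 2D convolution (strictly, cross-correlation): slide the kernel
    over the image with stride 1 and no padding, summing elementwise products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            acc = 0.0
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            row.append(acc)
        output.append(row)
    return output

# A 4x4 "image" with a vertical edge between dark (0) and bright (1) columns.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hand-picked vertical-edge detector (a CNN would learn these values).
kernel = [
    [-1, 1],
    [-1, 1],
]
for row in conv2d(image, kernel):
    print(row)  # prints [0.0, 2.0, 0.0] on each of three rows
```

The response is large only where the window straddles the edge, which is the kind of local pattern a first convolutional layer typically picks up.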

Recurrent Neural Networks (RNNs): Suited for sequential data, RNNs process inputs one step at a time while carrying a hidden state forward from step to step, making them effective for tasks like language modeling and time series analysis.

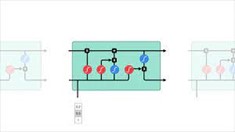
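The hidden-state recurrence can be sketched as a plain-Python loop. This assumes a simple (Elman-style) RNN with a tanh activation, `h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h)`; the weights and sizes are hypothetical, untrained values.

```python
import math

def rnn_forward(sequence, W_xh, W_hh, b_h, h0):
    """Run a simple RNN over `sequence`, returning the hidden state after each step."""
    h_prev = h0
    states = []
    for x in sequence:
        h_new = [
            math.tanh(
                sum(w * xi for w, xi in zip(W_xh[k], x))         # input contribution
                + sum(u * hj for u, hj in zip(W_hh[k], h_prev))  # carried-over state
                + b_h[k]
            )
            for k in range(len(h_prev))
        ]
        states.append(h_new)
        h_prev = h_new
    return states

# Hypothetical sizes: 1-dimensional inputs, 2-dimensional hidden state.
W_xh = [[0.5], [-0.3]]
W_hh = [[0.1, 0.2], [0.0, 0.4]]
b_h = [0.0, 0.1]
sequence = [[1.0], [0.0], [-1.0]]
states = rnn_forward(sequence, W_xh, W_hh, b_h, h0=[0.0, 0.0])
for t, h in enumerate(states):
    print(t, [round(v, 3) for v in h])
```

At step 1 the first hidden unit is nonzero even though that step's input and that unit's bias are both 0.0; its value comes entirely from the state carried over from step 0.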

Long Short-Term Memory Networks (LSTMs): An extension of RNNs, LSTMs use gated memory cells to mitigate the vanishing gradient problem, making them better suited to tasks that require remembering inputs from many steps earlier.
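A single LSTM step can be sketched as follows, assuming the standard formulation with forget, input, and output gates plus a tanh candidate (variants such as peephole connections exist). The cell state is updated additively, `c = f * c_prev + i * g`, which is the mechanism usually credited with easing the vanishing gradient problem. Parameter values are hypothetical, set by hand so the forget gate stays almost fully open; in practice they are learned.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def affine(W, b, v):
    """Compute W @ v + b for plain Python lists."""
    return [sum(w * vi for w, vi in zip(row, v)) + bi for row, bi in zip(W, b)]

def lstm_step(x, h_prev, c_prev, p):
    """One step of a standard LSTM cell. `p` maps gate names to (W, b),
    where each W acts on the concatenation [h_prev, x]."""
    hx = h_prev + x                                           # list concatenation
    f = [sigmoid(z) for z in affine(*p["forget"], hx)]        # how much of c_prev to keep
    i = [sigmoid(z) for z in affine(*p["input"], hx)]         # how much new info to write
    g = [math.tanh(z) for z in affine(*p["candidate"], hx)]   # candidate values
    o = [sigmoid(z) for z in affine(*p["output"], hx)]        # how much of c to expose
    c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, g)]
    h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
    return h, c

# Hypothetical parameters for a 1-unit cell with 1-dimensional input.
# Each W has one row of length 2, acting on [h_prev, x].
params = {
    "forget":    ([[0.0, 0.0]], [5.0]),    # forget gate ~0.993: keep the cell state
    "input":     ([[0.0, 10.0]], [-5.0]),  # input gate opens only when x is large
    "candidate": ([[0.0, 1.0]], [0.0]),
    "output":    ([[0.0, 0.0]], [0.0]),    # output gate fixed at 0.5
}
h, c = [0.0], [0.0]
cells = []
for x in [[1.0], [0.0], [0.0], [0.0]]:
    h, c = lstm_step(x, h, c, params)
    cells.append(c[0])
    print(round(c[0], 3))
```

Here the value written at the first step decays only by a factor of sigmoid(5) ≈ 0.993 per step while the input gate stays nearly closed, so the cell still holds most of it three steps later.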

Conclusion

Deep learning, with its neural networks and diverse architectures, continues to push the boundaries of what machines can achieve. As a data scientist, mastering these concepts opens up many possibilities for solving complex problems and contributing to the ever-evolving field of artificial intelligence. Stay tuned for more in-depth explorations of deep learning!