How a Pakistani Entrepreneur’s Bold Vision Led to a $1.7 Billion Acquisition: The Story of Rehan Jalil and Securiti AI

How a Website Can Skyrocket Your Business Growth in 2025

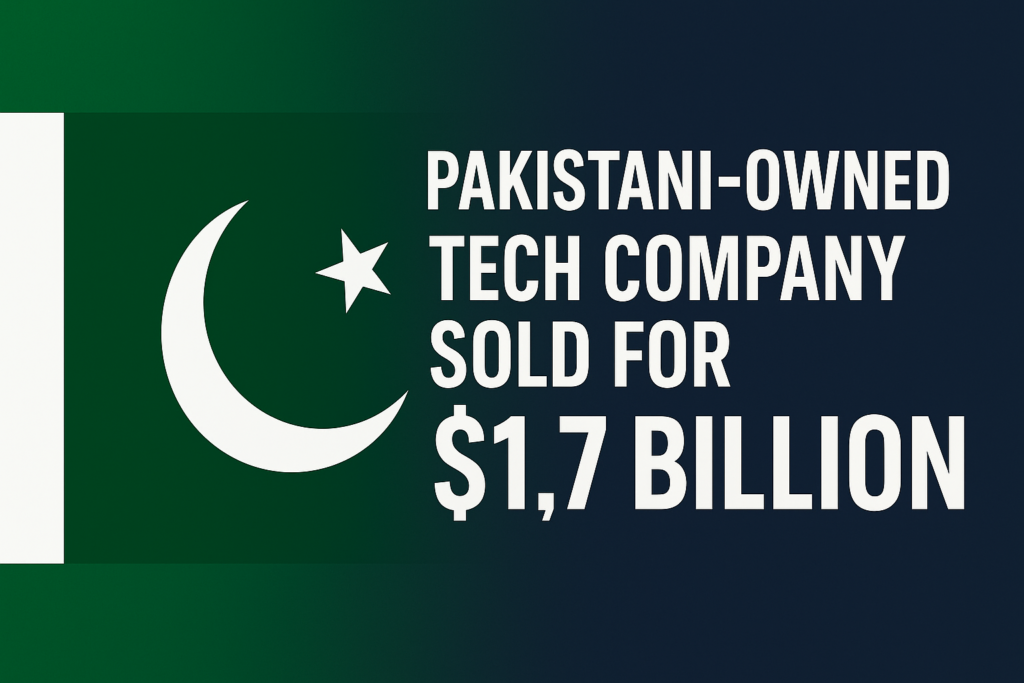

This article presents a practical, beginner-to-intermediate guide to the most essential linear algebra concepts every data scientist should understand. It covers:

• Vectors and their use in representing data points and calculating similarity.

• Matrices, which are used for storing datasets and performing transformations.

• Linear equations that form the foundation of regression models.

• Eigenvalues, eigenvectors, and SVD, crucial for dimensionality reduction techniques like PCA.

• Concepts such as orthogonality, projections, and vector spaces, which support optimization and machine learning algorithms.

Each section connects the theory directly to its applications in machine learning, data processing, NLP, and deep learning, making the guide both educational and actionable for real-world data science projects.
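The first and last bullets above (similarity between vectors, orthogonality, and projections) can be illustrated with a short sketch. This is plain Python, assuming vectors are represented as lists of floats. The function names are hypothetical and not taken from the full article. Libraries such as NumPy offer equivalents (`numpy.dot`, `numpy.linalg.norm`).

```python
import math

def dot(u, v):
    """Dot product of two equal-length vectors."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same length")
    return sum(a * b for a, b in zip(u, v))

def norm(u):
    """Euclidean length of a vector."""
    return math.sqrt(dot(u, u))

def cosine_similarity(u, v):
    """Cosine of the angle between u and v: 1 = same direction, 0 = orthogonal."""
    denom = norm(u) * norm(v)
    if denom == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot(u, v) / denom

def project(u, v):
    """Orthogonal projection of u onto v: (u.v / v.v) * v."""
    vv = dot(v, v)
    if vv == 0:
        raise ValueError("cannot project onto a zero vector")
    scale = dot(u, v) / vv
    return [scale * b for b in v]

# Hypothetical data points used only for illustration
a = [1.0, 2.0, 3.0]
b = [2.0, 4.0, 6.0]   # same direction as a, so similarity is (up to rounding) 1
c = [3.0, 0.0, -1.0]  # a . c = 0, so a and c are orthogonal

print(cosine_similarity(a, b))
print(cosine_similarity(a, c))        # 0.0
print(project([3.0, 4.0], [1.0, 0.0]))  # [3.0, 0.0]
```

The residual `u - project(u, v)` is always orthogonal to `v`. This is the same idea least-squares regression relies on when it projects the target vector onto the space spanned by the features.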

Google Pay (Google Wallet) Launches in Pakistan – A Game Changer for Digital Payments!

Explore OpenAI’s revolutionary AI video generator, Sora. Learn how this cutting-edge tool simplifies video creation, enhances creativity, and opens new possibilities for content creators, educators, and businesses.

Cracking the Data Science Interview for Fresh Graduates

Breaking into the field of data science as a fresh graduate can be challenging, but the right preparation can set you apart. Here’s a quick overview of what you need to know to ace your data science interviews:

1. Master the Basics: Strengthen your foundation in statistics, programming (Python, SQL), and machine learning.

2. Build a Portfolio: Showcase projects like predictive modeling, time series analysis, and data visualization using platforms like GitHub.

3. Prepare for Common Questions: Be ready to answer technical, statistical, and behavioral questions. Examples include:

• What is overfitting, and how can it be avoided?

• Explain the difference between supervised and unsupervised learning.

4. Practice Problem-Solving: Use platforms like Kaggle, HackerRank, and LeetCode to enhance your skills.

5. Focus on Domain Knowledge: Tailor your preparation to industries like finance, healthcare, or retail to demonstrate relevance.

For a detailed guide with answers to the 30 most popular interview questions, visit our complete article. Success lies in preparation, so get started today!


In the realm of data science, tools and technologies are indispensable. They enable data scientists to extract insights, build predictive models, and solve complex problems efficiently. This article…

Data Science Professional and Workflow Strategist

1: Introduction

Are you dreaming of becoming a billionaire? While it may seem like an impossible feat, with the right mindset and strategy, it’s within reach. Who knows,…

The tech industry is one of the most rapidly growing and profitable industries in the world. In recent years, the industry has seen a surge in the number…